Depth of field in VR

I have always been fascinated by depth of field in computer graphics. For me, it often defines photorealism by mimicking the shortcomings of a real camera. Naturally it is a poor match for interactive applications, because the computer doesn’t know what the user is looking at, but I’ve tried to squeeze depth of field into as many of the Mediocre games as I could get away with. In Smash Hit I wanted to use it for everything, but Henrik thought it looked too weird and made it harder to aim (he was probably right), so we ended up enabling it only in the near field. In Does not Commute and PinOut, which both have fixed camera angles, I’m doing a wonderful trick: enabling a slight depth of field by seamlessly blurring the upper and lower parts of the screen. This is super cheap and avoids depth artefacts completely.

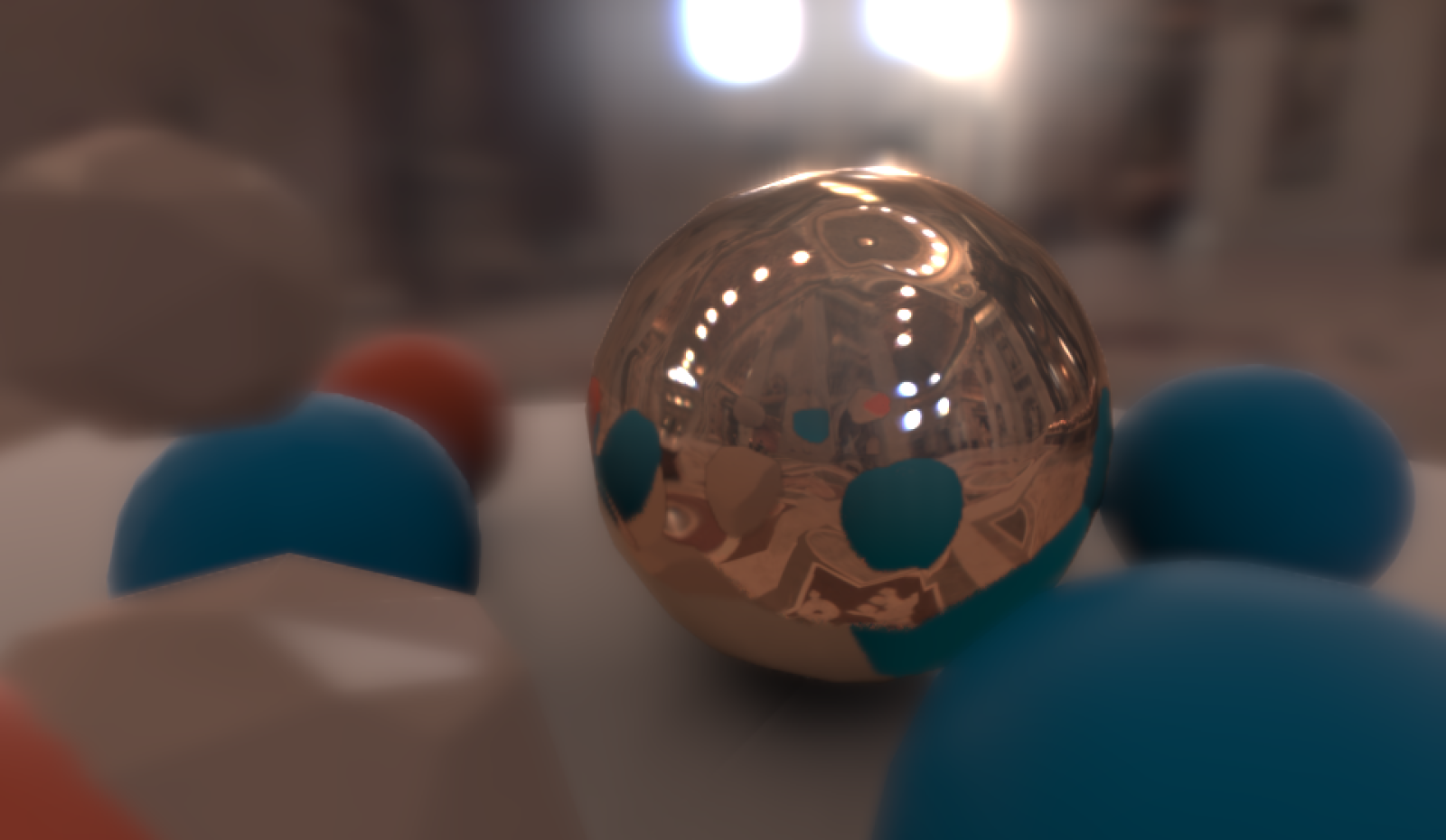

Anyway, since I’m so obsessed with depth of field I’ve started experimenting with it in VR. This has opened up a whole can of new problems and frustrations and I’d like to share some of my findings so far.

When I first tried it out with a fixed focal length it just looked weird, and I couldn’t really put my finger on why it was so different from a flat screen. Being able to focus on the blur itself gives an extremely artificial look. Some people say your eyes can’t focus on different things in VR because the screen is always at the same distance from your eyes. This is only partly correct. You can still turn your eyes independently in VR. A lot of what we experience as focus in real life is not related to the lens in the eye (accommodation), but to the angle of your eyes (vergence). This is what causes double vision behind or in front of what you’re focusing on, and it is probably a far more important depth cue than the blur itself. This is already in VR “for free”. It wasn’t until I tried depth of field in VR that I understood why all the VR experiences I’ve tried have been a bit “messy”, like a three-dimensional clutter of too much information. When the depth of field is there and coincides with the double vision that is already there, it gives a certain calmness to the image that is very pleasant.

Using depth of field as a tool for directing the viewer’s attention towards a specific area is probably never going to work in VR. I’m still not sure why it works so extremely well in 2D but fails so miserably in VR, but it’s probably because the viewer in VR can in fact “focus” on the out-of-focus areas by adjusting the angle of the eyes. However, depth of field could still add a lot to VR by removing the visual clutter, not really adding anything new but making the experience less painful.

In order to make an adaptive depth of field, one that adjusts the focal length dynamically, I’ve implemented a system that is pretty similar to what has been used in (D)SLR cameras for decades: a set of focus points that are all weighted together, with the central points being more dominant. For each focus point I shape cast a small sphere from the camera and record the hit distance. The reason I’m using sphere shape casting instead of raycasting is that I want focus points to ignore minor gaps between geometry. It also gives smoother focus transitions when moving the camera.
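As a rough illustration of the weighting, here is a minimal Python sketch. It is not the actual game code: the Gaussian falloff in `center_weight`, the normalized screen coordinates and the choice to skip misses are all assumptions made for the example, and the shape cast itself is represented only by a list of hit distances.

```python
import math

def center_weight(x, y, falloff=2.0):
    # Hypothetical weighting curve: Gaussian falloff from the screen center.
    # (x, y) is the focus point position in normalized screen space, [-1, 1].
    return math.exp(-falloff * (x * x + y * y))

def weighted_focus_distance(points, hit_distances):
    # points: list of (x, y) focus point positions.
    # hit_distances: sphere cast hit distance per point, or None for a miss.
    weight_sum = 0.0
    distance_sum = 0.0
    for (x, y), distance in zip(points, hit_distances):
        if distance is None:
            continue  # assumption: focus points that hit nothing are skipped
        w = center_weight(x, y)
        weight_sum += w
        distance_sum += w * distance
    if weight_sum == 0.0:
        return None  # nothing was hit; the caller can keep the previous focus
    return distance_sum / weight_sum

# One central point hitting something close, two outer points hitting a far wall.
points = [(0.0, 0.0), (1.0, 0.0), (-1.0, 0.0)]
focus = weighted_focus_distance(points, [2.0, 10.0, 10.0])
```

With these inputs the central point pulls the result well below the plain average of the three distances, which is the whole point of weighting towards the center.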

The focus point system works relatively well, but it tends to ignore small objects in the foreground. This is not because the shape casts miss them (they do not), but because only a few of the casts hit them, so they make only a minor contribution to the final focal length. To overcome this I introduced a minimum and maximum forced focus range, in which everything is in focus. We are now leaving physical territory, because with a real lens there is only a single depth where objects are in focus. However, a forced focus range is definitely not any less accurate than having focus everywhere, so who cares. The forced focus range starts with the focus point-computed focal length. For any focus point closer than that I simply adjust the range to include that distance. This modification turned out pretty well. It tends to keep most objects in the center area of the screen in focus all the time, so to some extent it cancels out the whole point of adding depth of field, but having out-of-focus objects in the peripheral vision and behind the main objects in the center gives a much more pleasant looking image.
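The range adjustment described above could look something like this in Python. Again this is only a sketch: the function name and the `(near, far)` return value are made up for the example, and misses are assumed to be passed in as `None`.

```python
def forced_focus_range(focus_distance, hit_distances):
    # The range starts collapsed at the focus point-computed distance.
    near = focus_distance
    far = focus_distance
    for distance in hit_distances:
        if distance is None:
            continue  # assumption: misses do not affect the range
        if distance < near:
            near = distance  # extend the range to include closer focus points
    return near, far

# A small foreground object at 2.0 is pulled into the in-focus range,
# while the hit at 7.0 (further away than the focus) leaves it unchanged.
near, far = forced_focus_range(5.0, [2.0, 7.0, None, 4.0])
```

Everything between `near` and `far` would then be treated as perfectly sharp, and only fragments outside the range get any blur.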

It does have some drawbacks of course. You can still focus on the blur, but it really only looks weird when it happens in the near field, which with the focus range modification can only happen if you look away from the center (and honestly, the lenses in today’s HMDs are so bad that everything is blurry there anyway).

So is it worth the hassle? I’m not sure, but I think so. When it works it looks truly awesome, and when it fails it’s definitely annoying. I’ll keep experimenting with this one.

Finally, here is how I do the actual depth of field. Probably nothing new in there, but everyone does it a little differently. Here is my version:

1) Fill up the DOF (depth of field) buffer using the final composited image before bloom and tone mapping. Store it in an RGBA texture, where the alpha channel represents the amount of blur. I use a half-size texture for this. To compute the blur amount, I’m using the formula k*(1/focalPoint-1/distance).

2) Blur the alpha channel of the DOF buffer horizontally and vertically. The size of the blur is based on the alpha value. The blur must be depth aware, so that: A) Fragments further away than the current fragment do not contribute to the blur. This prevents objects behind something from blurring what is in front, and B) Fragments that are closer do contribute, but only scaled by their alpha value. This will make blurry objects in front of something sharp bleed out over their physical extent.

3) Blur the RGB values of the DOF buffer horizontally and vertically based on the blurred alpha. This pass must also be depth aware in exactly the same way as B) above.

4) Mix the RGB values of the DOF buffer into the final image using the blurred alpha channel.
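To make the four steps a bit more concrete, here is a small CPU-side Python sketch operating on a single scanline with one color channel. It is a simplification and not the actual shader: taking the magnitude of the formula and clamping it to [0, 1], the fixed `radius` window (instead of a blur size driven by alpha), the weight of 1 for the center sample and the treatment of equal depths as "closer" are all assumptions made for the example.

```python
def blur_amount(distance, focal_point, k=1.0):
    # Step 1: k*(1/focalPoint - 1/distance), as in the text.
    # Taking the magnitude and clamping to [0, 1] is an assumption.
    return min(1.0, abs(k * (1.0 / focal_point - 1.0 / distance)))

def depth_aware_blur(values, alpha, depth, radius):
    # Steps 2 and 3: blur `values` along one axis using depth-aware weights.
    out = []
    n = len(values)
    for i in range(n):
        total = values[i]   # assumption: the center sample counts with weight 1
        weight_sum = 1.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            if j == i or depth[j] > depth[i]:
                continue  # rule A: fragments further away do not contribute
            total += alpha[j] * values[j]  # rule B: scaled by their alpha
            weight_sum += alpha[j]
        out.append(total / weight_sum)
    return out

def mix(sharp, blurred, alpha):
    # Step 4: blend the blurred color into the sharp image by blurred alpha.
    return [s + (b - s) * a for s, b, a in zip(sharp, blurred, alpha)]

# A blurry white object close to the camera next to a sharp black wall in focus.
depth = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
color = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
alpha = [blur_amount(d, focal_point=5.0, k=2.0) for d in depth]

blurred_alpha = depth_aware_blur(alpha, alpha, depth, radius=1)          # step 2
blurred_color = depth_aware_blur(color, blurred_alpha, depth, radius=1)  # step 3
final = mix(color, blurred_color, blurred_alpha)                         # step 4
```

In this example the near object bleeds one pixel into the sharp wall, while the rest of the wall stays untouched. If the roles are swapped, with a sharp object in front of a blurry background, rule A keeps the background blur from leaking onto the foreground.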